The recent government White Paper on AI regulation does little to calm nerves as the furore over Artificial Intelligence and chatbots gains pace

‘We are concerned by feedback from across industry that the absence of cross-cutting AI regulation creates uncertainty and inconsistency which can undermine business and consumer confidence in AI, and stifle innovation.’ So says the government’s recent White Paper on AI, which goes on to propose a regulatory framework for the AI landscape.

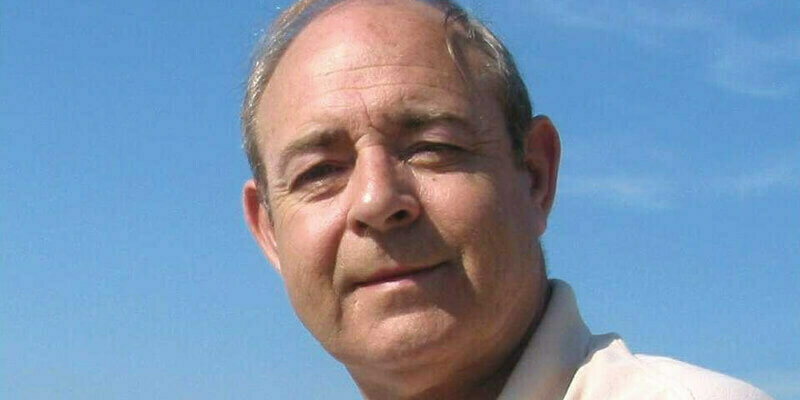

And just as Seppe Cassettari voices a warning on page 51 of this issue, so the Adam Smith Institute is yet to be convinced of measures intended to make Britain an ‘AI Superpower’. While broadly welcoming the White Paper, it urges caution. “We shouldn’t be risking inventing a nuclear blast before we’ve learnt how to keep it in the shell. We must make sure AI is used safely and responsibly to bring about a better world - not thinking of safety as an afterthought,” argues Connor Axiotes, the Institute’s Research Lead on Resilience, Risk, and State Capacity.

Chatbot algorithms are already so complex that their creators want to hide that complexity, letting users interact with them as easily as they would perform a Google search. The risks of disinformation and misinformation are evident, hence the drive for regulation. The danger is that the AI rules being proposed in Britain, the EU and elsewhere may simply not be ready, or robust enough, to help users tell what’s real from what isn’t, and what’s good from what’s bad.